We have come to rely on machine learning systems for everything from creating playlists to driving cars, but like any tool, they can be turned to dangerous and unethical purposes, even accidentally. Today's illustration of this fact is a new paper by Stanford researchers who built a machine learning system that, they claim, can tell from a few photos... whether the person in front of you is gay. Or not.

This study is, of course, as surprising as it is disturbing. Beyond once again subjecting vulnerable groups to a systematic invasion of privacy on intimate matters, it cuts directly against the egalitarian view that we cannot (and should not) judge a person by their appearance, let alone presume to guess their sexual orientation from a picture or two. Absurd? The accuracy reported in the paper leaves no room for doubt: this is not only possible, it has already been done.

The system picks up on signals far subtler than people can perceive, clues you do not even know you are giving off. And it demonstrates, as intended, that privacy in an era of ubiquitous computer vision can simply be destroyed.

Before discussing the system itself, it should be made clear that the study was conducted with good intentions. Naturally, it raises countless questions. Perhaps the most pertinent comments came from the authors, Michal Kosinski and Yilun Wang, explaining why the work was published at all:

"We were very disturbed by these results and spent a lot of time deciding whether to make them public. We did not want to expose people to the very risks we are warning about. The ability to control when and to whom to reveal one's sexual orientation is crucial not only for one's well-being, but also for one's safety."

"We believed that lawmakers and LGBTQ communities should be aware of the risks they face. We did not create a tool to invade privacy; rather, we showed that basic and widely used methods pose serious threats to privacy."

Granted, this is just one of many systematic attempts to extract sensitive information such as sexuality, emotional state, or medical conditions. But this case is more serious, for several reasons.

Seeing what can't (and shouldn't) be seen

In a paper accepted for publication in the Journal of Personality and Social Psychology, the researchers describe in detail a fairly conventional deep learning approach aimed at determining whether people can be identified as gay or straight from their faces alone. Note: the work is still a preprint.

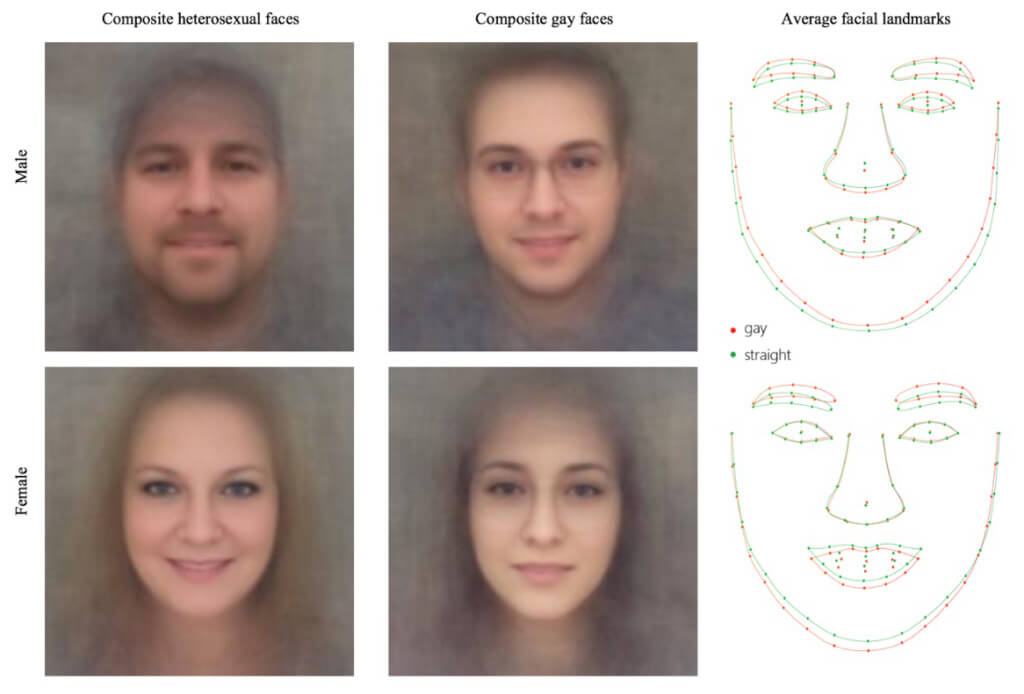

Using a database of facial images taken from publicly available dating site profiles, the researchers collected 35,326 photographs of 14,776 people, with gay and straight men and women all equally represented. Their facial features were then extracted and quantified, from the shape of the nose and eyebrows to facial hair and facial expression.

A deep neural network ingested all of these features and learned which ones tend to be associated with a particular sexual orientation. The researchers did not seed it with any preconceptions about how straight or gay people look; the system simply sorted the features and correlated them with sexual orientation.
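The step of correlating extracted features with a label can be illustrated, in very simplified form, with a logistic regression classifier trained on numeric feature vectors. This is a minimal sketch of that general technique, not the researchers' pipeline: it assumes each face has already been reduced to a short numeric vector, and the toy data and the function names `train_logistic` and `predict_proba` are hypothetical.

```python
import math


def sigmoid(z: float) -> float:
    """Map a real-valued score to a probability between 0 and 1."""
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(features, labels, lr=0.1, epochs=2000):
    """Fit weights and a bias by batch gradient descent on the log-loss."""
    n_features = len(features[0])
    weights = [0.0] * n_features
    bias = 0.0
    n = len(features)
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for x, y in zip(features, labels):
            # Prediction error for this example drives the gradient.
            p = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)
            err = p - y
            for j in range(n_features):
                grad_w[j] += err * x[j]
            grad_b += err
        # Step against the average gradient.
        weights = [w - lr * g / n for w, g in zip(weights, grad_w)]
        bias -= lr * grad_b / n
    return weights, bias


def predict_proba(weights, bias, x):
    """Probability that feature vector x belongs to the class labeled 1."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


# Hypothetical toy data: two made-up numeric features per face, label 1 or 0.
X = [[0.2, 1.0], [0.4, 0.9], [0.3, 1.2], [1.1, 0.1], [0.9, 0.3], [1.2, 0.2]]
y = [1, 1, 1, 0, 0, 0]

w, b = train_logistic(X, y)
for x, label in zip(X, y):
    print(label, round(predict_proba(w, b, x), 2))
```

Each learned weight shows how strongly a feature pushes the prediction toward one label or the other, which is the sense in which such a model "notices" which traits go with which label. A real system would use far more features and would be judged on faces it was not trained on, not on its training set.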

These patterns could then be used to let the computer guess a person's sexual orientation, and it turned out that the AI system does this far better than people do. Shown pairs of photos at a time, the algorithm correctly identified the orientation of 91% of the men and 83% of the women. People shown the same pictures were right in 61% and 54% of cases, respectively, not much better than a coin toss.
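The pairwise setup corresponds to a standard metric: if a classifier gives each face a score, its pairwise accuracy is the fraction of (gay, straight) pairs in which the gay person gets the higher score, which equals the area under the ROC curve (AUC). A minimal sketch, with made-up scores rather than data from the study; counting ties as half a correct answer is a common convention, assumed here.

```python
from itertools import product


def pairwise_accuracy(pos_scores, neg_scores):
    """Fraction of (positive, negative) pairs ranked correctly; ties count half.

    This is the same quantity as the ROC AUC of the scores.
    """
    wins = 0.0
    for p, n in product(pos_scores, neg_scores):
        if p > n:
            wins += 1.0
        elif p == n:
            wins += 0.5
    return wins / (len(pos_scores) * len(neg_scores))


# Hypothetical classifier scores (higher = "more likely gay"); not from the study.
gay_scores = [0.9, 0.8, 0.6, 0.4]
straight_scores = [0.7, 0.3, 0.2, 0.1]

print(pairwise_accuracy(gay_scores, straight_scores))  # → 0.875
# A scorer that cannot tell anyone apart does no better than a coin toss.
print(pairwise_accuracy([0.5] * 4, [0.5] * 4))  # → 0.5
```

On this scale, 0.5 is chance and 1.0 means every pair is ordered correctly, which is why the human judges' 54% for women is close to guessing.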

The differences among the four groups are described in a separate paper. Apart from obvious behavioral differences, such as grooming habits or the use of makeup in one group, the general trend was for gay men to have more "feminine" features and lesbians more "masculine" ones.

The figure shows where the traits indicating sexual orientation were found.

It should be noted that this accuracy was achieved under the ideal condition of choosing between two people, one of whom is known to be gay. When the system evaluated a group of 1,000 people of whom only 7% were gay (to better reflect their actual share of the population), its results were weaker. Its best result: of the 10 faces it was most confident about, nine were indeed gay, a 90 percent hit rate.
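Why the 1,000-person test is harder comes down to the base rate. A minimal sketch, assuming the 90 percent figure means nine of the ten faces the system ranked as most likely gay: it compares that hit rate with what a blind pick of ten would yield, and shows how precision among the top k is computed. The function `precision_at_k` and the toy scores are hypothetical illustrations, not the study's code or data.

```python
def precision_at_k(scores, labels, k):
    """Fraction of true positives (label 1) among the k highest-scoring people."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    return sum(label for _, label in ranked[:k]) / k


# Figures from the text: 1,000 people, 7% of them gay, 9 hits among the top 10.
population = 1000
positives = population * 7 // 100                   # 70 gay people in the group
k = 10
expected_random_hits = k * positives / population   # hits expected from a blind pick of 10
reported_precision = 9 / k                          # 9 of the top 10

print(positives, expected_random_hits, reported_precision)  # → 70 0.7 0.9

# Hypothetical scores and labels, only to show how precision_at_k works.
toy_scores = [0.95, 0.90, 0.85, 0.60, 0.40, 0.30]
toy_labels = [1, 1, 0, 1, 0, 0]
print(precision_at_k(toy_scores, toy_labels, 2))  # → 1.0
```

A blind pick of ten would be expected to contain fewer than one gay person, so nine is far above chance. But precision on the top ten alone says nothing about how many of the other 61 gay people in the group the system would miss or how many straight people it would flag further down the ranking.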

There is also a real possibility of bias stemming from the data. For one thing, it included only young white Americans, categorized as male or female and as gay or straight.

"Despite our attempts to obtain a more diverse sample, we were limited to studying white participants from the United States. Because prejudice against gay people and the use of online dating sites are unevenly distributed across ethnic groups, we could not find enough non-white gay participants."

Although the researchers suggest that other ethnic groups likely share similar facial patterns and that the system would identify them just as effectively, this needs to be confirmed, not assumed.

It could also be argued that the classifier picked up on traits specific to people on dating sites, shaped in part by self-selection: for example, straight American men may deliberately avoid any look that might suggest they are gay.

Of course, it is reasonable to doubt how effective this system would be in the real world, since it was trained on a limited dataset and works best on examples drawn from that same pool. Further research is needed. Still, it seems short-sighted to assume that a working system of this kind is impossible.

In truth

Many argue that this technology is no better than "phrenology 2.0", a bogus linking of unrelated physical traits to complex inner processes. That reaction is understandable, but mistaken.

"Physiognomy is now universally, and rightly, rejected as a mix of superstition and racism disguised as science," the researchers write in the paper's introduction (physiognomy judges people by their facial features, while phrenology concerned itself with the shape of the skull). But that pseudoscience collapsed because its proponents worked backwards: they decided who was "criminal" or "sexually deviant" and then looked for the features such people had in common.

It turns out that people are simply bad at this kind of task: we see too much of ourselves in others and jump to instinctive conclusions. But that does not mean visible traits are unconnected to physiology or psychology. No one doubts that machines can detect things in people that they themselves cannot see, from tiny chemical traces of explosives and drugs to subtle patterns in MRI scans that signal early-stage cancer.

According to the paper, the computer vision system picks up on similarly subtle patterns, both artificial and natural, but mainly the latter: the researchers tried to focus on features that cannot easily be changed. This supports the hypothesis that sexual orientation is biologically determined, possibly shaped by various influences on fetal development that could produce small physiological differences as well as hormonal patterns of attraction. The patterns the system found resemble those predicted by the prenatal hormone theory.

This opens up a broader social and philosophical debate about love, sexuality, determinism, and the reduction of emotions and personality to simple biological markers. But we will leave that aside and turn to another serious problem: privacy.

Dangers and limitations

The consequences of automatically determining a person's sexual orientation from a few photographs are enormous, first and foremost because it puts LGBTQ people in danger wherever in the world they remain an oppressed minority. The potential for abuse of such a system is huge, and some may rightly disagree with the researchers' decision to build and document it.

But one can equally argue that it is better to expose such a capability so that countermeasures can be developed, or at least so that people can prepare for it. The difference is that you can choose not to post information online, but you cannot control who sees your face. We live in a surveillance society, and less and less stays hidden from view.

"Even the best laws and technologies aimed at protecting privacy are, in our view, doomed to fail," the researchers write. "The digital environment is very difficult to police; data can easily be moved across borders, stolen, or recorded without users' consent."

The problem is enormously complex, yet it also showcases the remarkable capabilities of artificial intelligence. As usual, the implications of such technologies are mixed, but they matter a great deal for the future of both humanity and the individual. The researchers themselves are not especially optimistic about how such technologies will be used, but they are realists in an era when a technology we hope will rescue us is just as likely to be cast as the villain.

"We believe that further erosion of privacy is inevitable, and the safety of gay people and other minorities depends not on the right to privacy but on respect for human rights and the tolerance of societies and governments," they write. "For the post-privacy world to be safer and more hospitable, it must be inhabited by well-educated people who are radically intolerant of intolerance."

Technology will not save us from ourselves.

Based on materials from TechCrunch.